The Roaring 2020s: The Dance of AI Policy

Who’s Hosting? Who’s Invited? Who’s the DJ?

By claudia barbara esther, March 15, 2022.

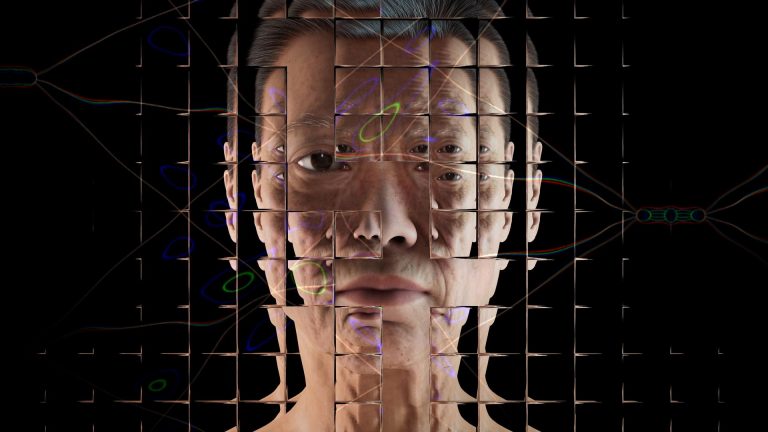

Artificial Intelligence (AI) systems are being built at a rapid pace, but they don’t benefit all societal groups equally. To address this, we must reevaluate innovation, expertise, and the policies that aim to ensure the responsible development of sociotechnical systems and fair access to and use of them. This blog post considers how to deliberate on the AI governance debate in the European Union.

Many issues related to responsibility in AI have been addressed through non-binding or partially binding agreements (e.g., “AI ethics”). The EU AI Act, proposed by the European Commission in April 2021, aims to bring clarity to this mess and to provide the first binding framework for governing AI.

AI systems are embedded in numerous facets of the private and public spheres, ranging from seemingly harmless applications, like translation services, to those with far-reaching societal consequences, as in e-healthcare, social security, or predictive policing. While other inventions are frequently governed under product liability standards, governing AI systems is fundamentally different. Why? Because AI is ingrained in products and services and continues to learn in the field, its materiality can be tricky to grasp.

The way AI systems are framed oddly amplifies this sense of immateriality and abstraction. Because AI is primarily treated as a technical domain, such systems are predominantly presented in a way that can almost exclusively be understood by techies in Silicon Valley, wearing hoodies, eating fancy poké bowls, and listening to experimental ambient music sets. As a result, the dialogue is arbitrarily narrowed to technical benefits and constraints, leaving externalities and societal implications hardly touched and, if addressed at all, assigned to somewhat isolated AI ethics and auditing teams.

Meanwhile, there is a growing societal desire to hold the big tech institutions developing AI accountable and responsible. Yet the debate’s overemphasis on, and glorification of, technical expertise still grooves its way into policymakers’ agendas. Governing AI, therefore, translates into a culture of epistemic injustice that gives significant agency to a few already privileged and powerful actors.

The Commission’s proposed AI Act positions the EU to set the standard for AI regulation globally. By using an analogy, I hope to stimulate critical thinking on how this growing societal demand for responsibility and accountability takes shape in regulation and is negotiated among actors. Like organising a dance party, policymaking requires a checklist of decisions and agreements made in pursuit of specific goals. In what follows, I will draw parallels between AI policymaking and the comparable questions that, to me, arise when planning a party.

The dance party. Who sets the tone, who’s the DJ, and who attends?

First and foremost, there is the music. My friend Lukas, who regularly fills dance floors and open-air stages, once received a review: “The music was banger – just what we needed! Lukas played songs we requested and some we didn’t know that were perfectly in sync with genres we love. He did an incredible job of keeping our folks on the dance floor – they weren’t afraid to bust out iconic dance moves!”

Picture the party where Lukas is DJing: decisions about which sounds will be played are made beforehand, whether high-pitched disco tunes, effortlessly flowing downtempo, or techno trance. The music determines who wants to participate and who can. My 79-year-old grandmother Ruth certainly wouldn’t enjoy a rave at Berlin’s famous techno club Berghain. Likewise, my friend Isa (31), a queer trance DJ, wouldn’t feel comfortable joining brass bands in family-run small-town restaurants where only men listen to traditional non-electronic music with particularly controversial lyrics.

AI policymaking processes, too, involve political choices, made through the same mechanisms of implicit or explicit exclusion that we encounter when picking a party’s DJ(s) and venue.

EU AI policy party. Setting the tone, identifying the problem, and deciding who joins.

I question who is hosting, who is invited to frame the problem, and who has agency in this conversation. Who else does the Commission invite to the table besides techies, policymakers, and lawyers? Who remains unrepresented during the problem-definition phase? How do multiple, contradictory interpretations of policy problems and solutions play out against each other? This policy paradox, in which problems and possible solutions are defined in competing ways, isn’t new. It is these foundational choices that define the solution space and its outcomes, and they are, at the same time, the most significant political choices made.

I acknowledge that the EU AI Act leaves some ambiguity regarding the Commission’s intended scope, intent, and goals. Is it about making AI trustworthy for consumers and widely accepted by society? About responsibility versus liability? About incentives to get ahead in the global AI race? Or about pushing for the deployment of AI systems in the public sector? As in my analogy, the scope, intent, and goals, like the music played at a party, decide who should, can, and is empowered to attend.

If I could organise the party, …

… the most critical step would come even earlier. On my own, I can’t launch perfect action plans, send out nicely crafted invitation cards, and host the most inclusive dances. Rather, I would invite a range of individuals from science, business, government, and society, along with Isa and my grandma Ruth, to organise the event. I wouldn’t scout a DJ in advance, but would instead let a heterogeneous committee decide on music genres and the respective DJs. Starting from scratch will almost certainly mean that the party takes longer to plan and execute. Nevertheless, I am convinced that if we push science, industry, the public sector, society, Ruth, and Isa to work in tandem, we will end up with a better, more diverse, and more resilient set of parties (and policies).

Ultimately, I urge that AI shouldn’t be “done to society” by highly specialised committees of techies, but should instead emerge from deliberative and participatory processes that determine which systems society regards as valuable and which it doesn’t.